Say Hello to My Friend!

Expanding Anime Characters into the Wider World

Traditionally, anime was limited to one-way formats such as books and television.

Since around 2017, anime has expanded onto widely accessible platforms such as mobile phones, and its creator base has gone global. At the same time, 3D modeling and shading of anime-style characters have become seamless and polished.

When I saw 3D-rendered anime characters pop out into the real world, I was deeply impressed and motivated to combine the things I love with technology.

That is why I brought an anime character into interactive technology. I believe abstraction and emotion offer more opportunities than photorealism to make life and technology joyful, and leave more room to explore.

Make a Sensor

To implement face recognition, I had to install MediaPipe, but because of its complex setup and unclear documentation, I relied on ChatGPT for guidance. Selecting only the essential parts from its many complex features proved unexpectedly difficult.

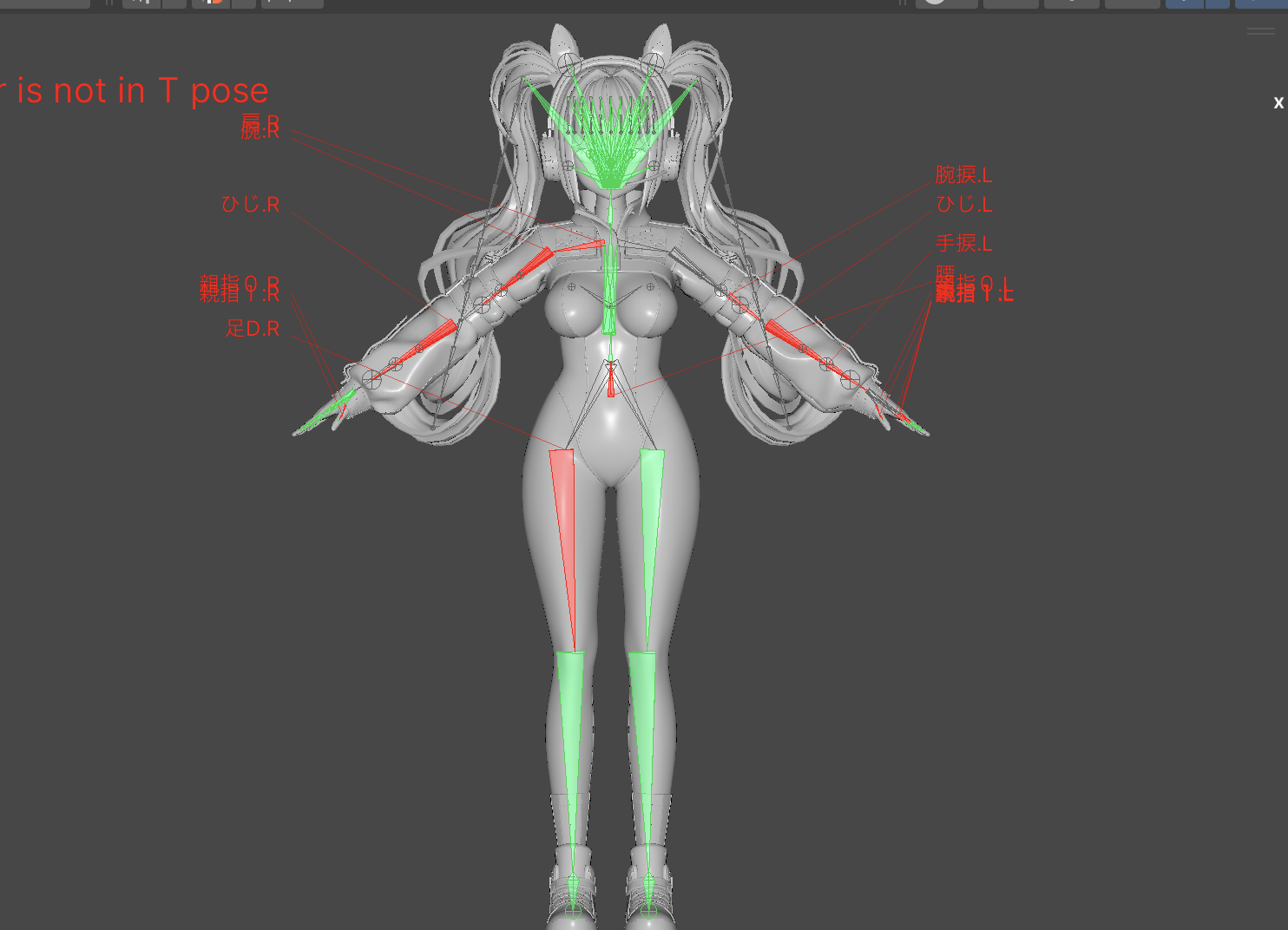

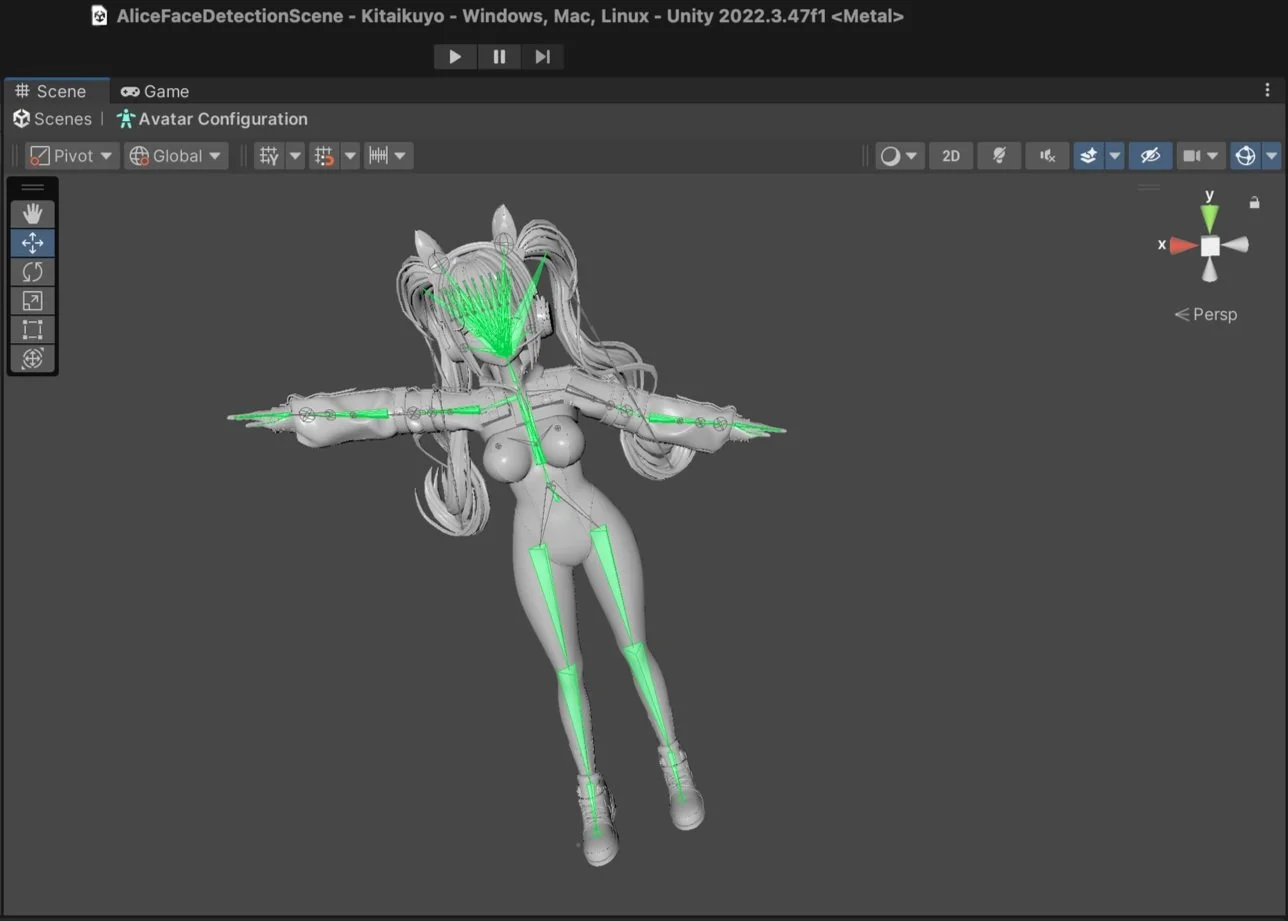

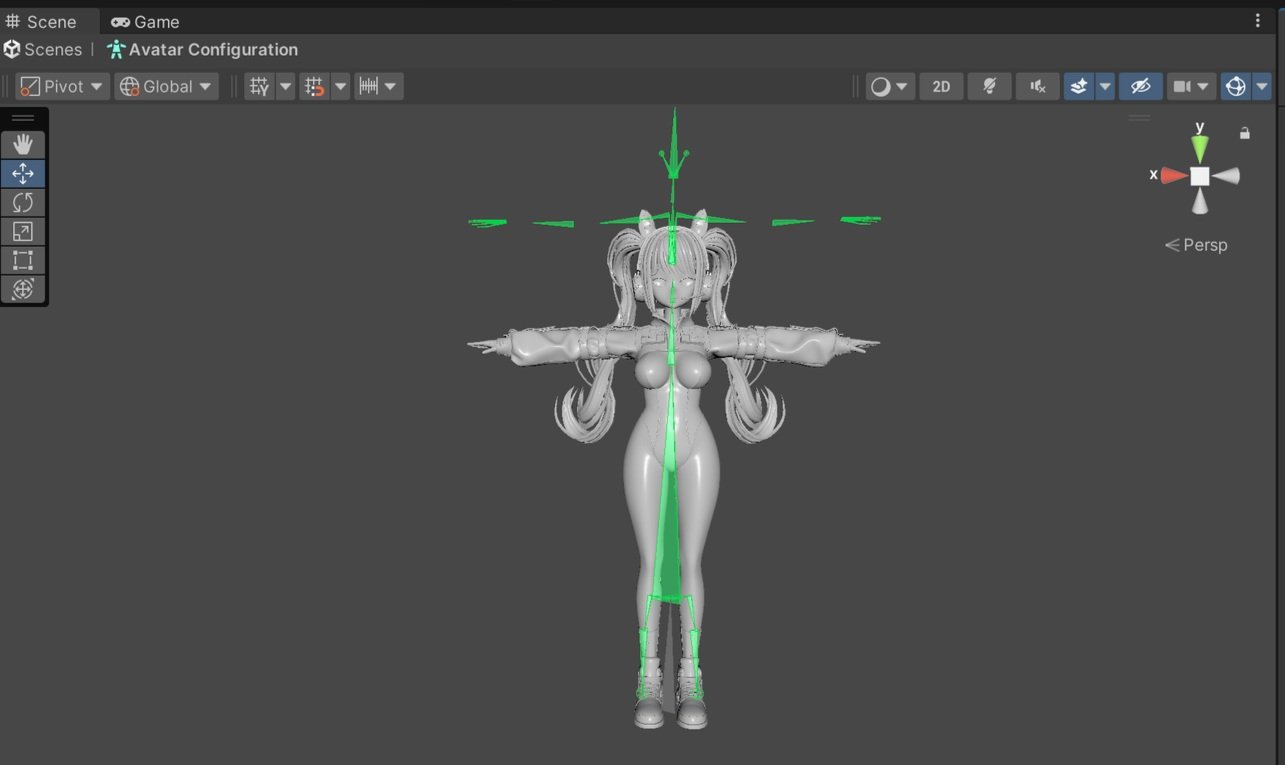
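Assuming the sensor only needs to tell the character when someone is standing in front of the camera, the essential signal can be reduced to a per-frame "face present" flag. The sketch below is a hypothetical illustration, not the project's actual code: it debounces that flag so a single flickering detection does not fire a greeting, and adds a cooldown so the greeting is not spammed. `GreetTrigger`, `required_frames`, and `cooldown_frames` are names invented for this example, and the frame counts are placeholders. It is written in Python for brevity; in Unity the same logic would live in a C# script that sets an Animator trigger.

```python
from dataclasses import dataclass, field


@dataclass
class GreetTrigger:
    """Hypothetical helper: turns a noisy per-frame "face present" flag into one greeting event.

    required_frames: consecutive frames with a face needed before the greeting fires.
    cooldown_frames: frames that must pass after a greeting before another one can fire.
    """
    required_frames: int = 5
    cooldown_frames: int = 30
    _streak: int = field(default=0, init=False, repr=False)
    _cooldown: int = field(default=0, init=False, repr=False)

    def update(self, face_present: bool) -> bool:
        """Feed one frame's result; return True only on the frame the greeting fires."""
        if self._cooldown > 0:
            self._cooldown -= 1
        # Count consecutive frames with a face; a single empty frame resets the streak.
        self._streak = self._streak + 1 if face_present else 0
        if self._streak >= self.required_frames and self._cooldown == 0:
            self._cooldown = self.cooldown_frames
            self._streak = 0
            return True
        return False


# A brief flicker at frame 0 is ignored; three steady frames fire the greeting once.
trigger = GreetTrigger(required_frames=3, cooldown_frames=10)
frames = [True, False, True, True, True, True, True]
print([i for i, present in enumerate(frames) if trigger.update(present)])  # [4]
```

With this design, a person who stays in view is greeted again only after the cooldown has elapsed.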

Make It Rigged

Due to my limited modeling skills, I initially tried using an official character file from the game’s website. However, rigging it proved difficult because the file was built for a specific platform, and even after I converted its A-pose to a T-pose, new complications arose. Just as I was about to give up, I rediscovered a model I had finished during winter break and followed the manual step by step. Although the process was time-consuming, it taught me not only about rigging structures and Blender tools but also about the importance of understanding how the skills in a 3D workflow connect.

Make It Loop

Initially, I relied on ChatGPT to link multiple scripts for animation triggers, but faculty feedback made me realize this had led to overscripting. Intimidated by the complexity of game engines, I had leaned on AI too early, only to be overwhelmed in return. I eventually simplified the logic by letting Mixamo animations handle movement and rotation while limiting scripts to simple triggers. However, I discovered that even tiny offsets of just 1 or 2 degrees caused unpredictable movement loops. Mixamo’s internal settings also led to coordinate drift, which I stabilized by looping key animations in Unity’s Animator Controller and baking the Y-axis data.
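Why do offsets of only 1 or 2 degrees matter? When a clip loops with root motion applied, each cycle starts where the previous one ended, so a small mismatch between a clip's first and last frames keeps adding up. The Python sketch below is a simplified model, not Unity's actual animation system: `drift_after_loops` and its error values are hypothetical, and the `bake_rotation` and `bake_y` flags stand in for Unity's "Bake Into Pose" import options for root rotation and root position (Y). We assume that second option is what baking the Y-axis data refers to.

```python
def drift_after_loops(yaw_error_deg, rise_error_m, loops, bake_rotation=False, bake_y=False):
    """Accumulate hypothetical per-cycle root errors over `loops` repetitions of one clip.

    yaw_error_deg / rise_error_m: mismatch between the clip's first and last frame.
    Unbaked channels carry over into the next cycle, so their error adds up;
    a baked channel stays inside the pose and never moves the character's root.
    """
    heading, height = 0.0, 0.0
    for _ in range(loops):
        if not bake_rotation:
            heading += yaw_error_deg
        if not bake_y:
            height += rise_error_m
    return heading, height


# A 2-degree, 1 cm mismatch per cycle, after 45 walk cycles:
print(drift_after_loops(2.0, 0.01, 45))  # heading 90.0 degrees, height about 0.45 m
print(drift_after_loops(2.0, 0.01, 45, bake_rotation=True, bake_y=True))  # (0.0, 0.0)
```

In this model, a 2-degree error is invisible in a single cycle but turns the character a full quarter-turn after 45 loops, which is why tiny offsets can make looped movement look unpredictable.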

Make a World

After rigging and looping basic gestures such as greeting and walking, I added an HDRI and free Unity environment assets to visually situate the character. This was not just for visual richness but for narrative context: the setting suggests who the character is and what kind of world they inhabit, so viewers are more likely to feel connected and engaged.

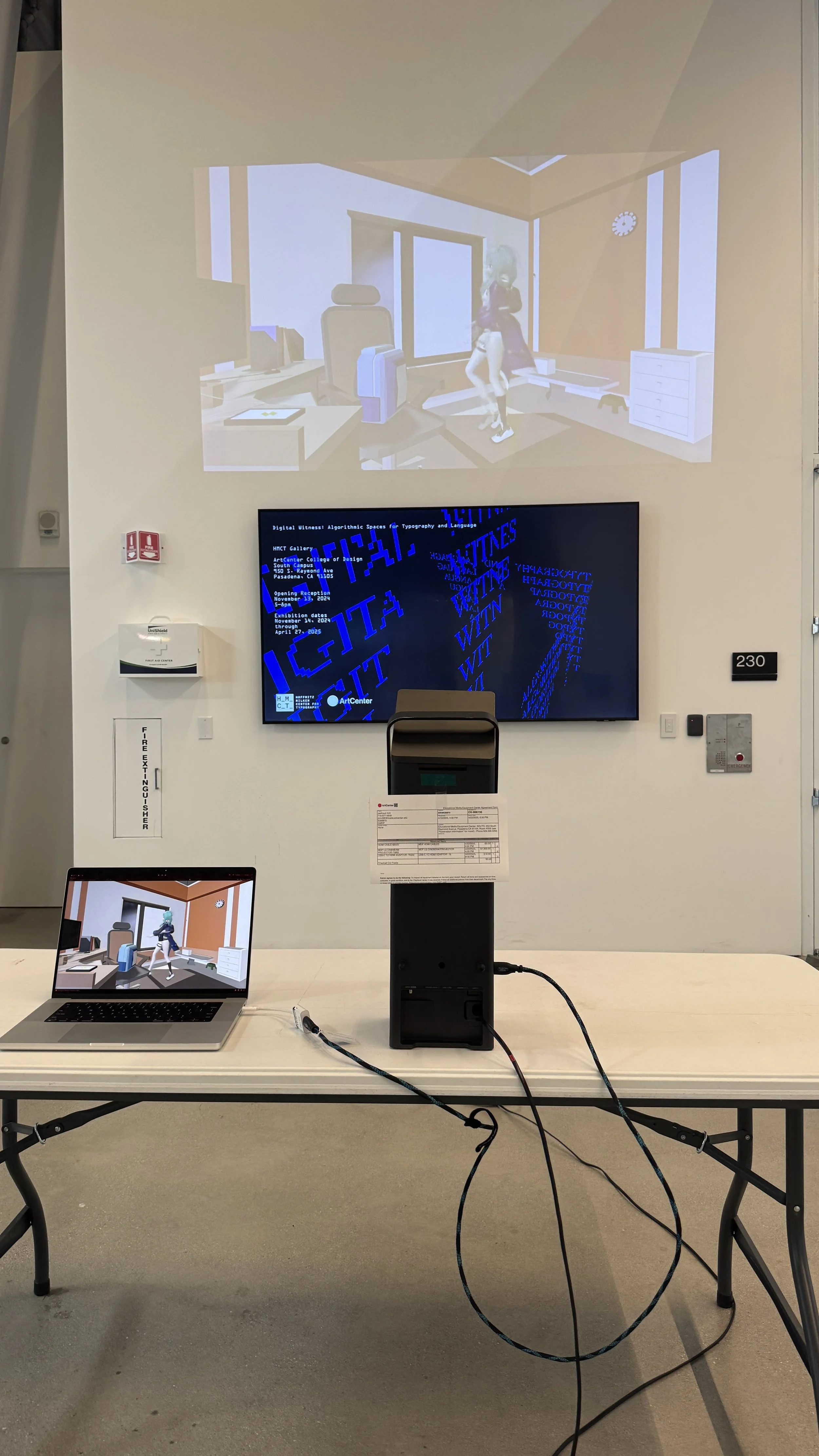

Make It Public

When the project was finally ready, I aimed to scale it up for a public space using projection, hoping to increase visibility and interaction. However, unexpected variables emerged: ambient lighting, installation layout, camera detection range, and pedestrian flow all posed challenges, which led to numerous trials and adjustments during on-site setup.
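Camera detection range is one of these variables that can be partly handled in software. A common heuristic (an assumption here, not something the project describes) is to treat a face's on-screen size as a proxy for distance: MediaPipe's face detector reports a confidence score and a bounding box normalized to the frame size, so faces that appear too small likely belong to passersby far from the installation. The sketch below models detections as a hypothetical `FaceBox` tuple rather than MediaPipe's own result objects; `nearest_engaged_face` and both thresholds are illustrative values that would need tuning for the actual lighting and layout.

```python
from typing import List, NamedTuple, Optional


class FaceBox(NamedTuple):
    """Hypothetical simplified detection: confidence plus box width as a fraction of frame width."""
    score: float
    rel_width: float


def nearest_engaged_face(faces: List[FaceBox], min_score: float = 0.6,
                         min_rel_width: float = 0.12) -> Optional[FaceBox]:
    """Return the largest (likely closest) confident face that is big enough, or None."""
    # Drop low-confidence detections (e.g. caused by poor lighting) and distant, small faces.
    candidates = [f for f in faces if f.score >= min_score and f.rel_width >= min_rel_width]
    # Among the rest, engage with whoever appears largest, i.e. is probably nearest.
    return max(candidates, key=lambda f: f.rel_width, default=None)


faces = [FaceBox(0.9, 0.05), FaceBox(0.8, 0.20), FaceBox(0.4, 0.30)]
print(nearest_engaged_face(faces))  # FaceBox(score=0.8, rel_width=0.2)
```

Here the first face is rejected as too far away and the third as too uncertain, so only the nearby, confident detection would be passed on to the greeting trigger.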

Demonstration

Despite countless trials and moments of despair, seeing people pause, approach the camera, and try to greet the animated character made everything worth it. At that moment, I felt I had truly touched the dream I had been chasing; it was a deeply moving, indescribable experience.

Scalability


Keep studying 3D modeling with toon shading, rigging, and animation

Convert my own character into 3D, then make it playable in a simple Unity playground

Learn engine fundamentals instead of relying on GPT-driven overscripting